Computer Vision: Semantic segmentation of RGB-D images of plant organs

My GitHub repository for this project: click here

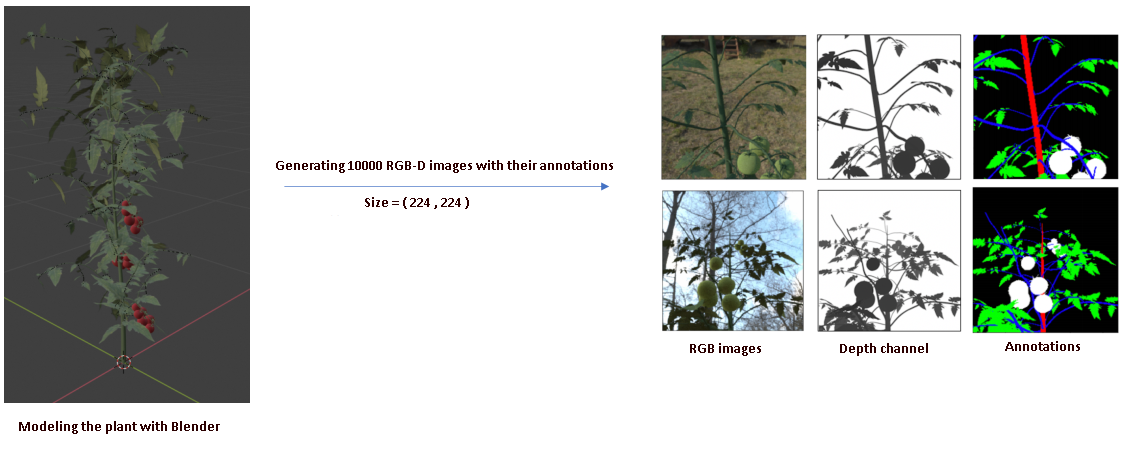

This project performs semantic segmentation of 4 classes of plant organs (stem, petiole, leaf and fruit) from RGB-D images using Python 3, TensorFlow 2.0 and Keras. I worked with synthetic data, randomly generated and annotated with Blender, and used a SegNet architecture trained from scratch on 10,000 images.
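To make the data layout concrete, here is a minimal NumPy sketch of how one RGB-D sample and its annotation could be prepared for a segmentation network. It assumes the depth map is stacked as a fourth channel next to RGB, that depth is min-max normalised, and that the labels are integers from 0 to 4 with background first. The helper names `make_rgbd_input` and `one_hot_labels` are hypothetical and the project's actual preprocessing may differ.

```python
import numpy as np

HEIGHT, WIDTH = 224, 224
NUM_CLASSES = 5  # background + stem, petiole, leaf, fruit (class order is an assumption)

def make_rgbd_input(rgb, depth):
    """Stack an RGB image (H, W, 3) and a depth map (H, W) into one (H, W, 4) array."""
    rgb = rgb.astype(np.float32) / 255.0  # scale colours to [0, 1]
    depth = depth.astype(np.float32)
    span = depth.max() - depth.min()
    # Min-max normalise depth (assumed); a constant depth map becomes all zeros.
    depth = (depth - depth.min()) / span if span > 0 else np.zeros_like(depth)
    return np.concatenate([rgb, depth[..., None]], axis=-1)

def one_hot_labels(label_map, num_classes=NUM_CLASSES):
    """Turn an integer label map (H, W) into one-hot targets (H, W, num_classes)."""
    return np.eye(num_classes, dtype=np.float32)[label_map]

# Random stand-ins for one rendered image, its depth map and its annotation.
rng = np.random.default_rng(0)
rgb = rng.integers(0, 256, size=(HEIGHT, WIDTH, 3), dtype=np.uint8)
depth = rng.random((HEIGHT, WIDTH))
labels = rng.integers(0, NUM_CLASSES, size=(HEIGHT, WIDTH))

x = make_rgbd_input(rgb, depth)
y = one_hot_labels(labels)
print(x.shape, y.shape)  # (224, 224, 4) (224, 224, 5)
```

With this layout, the network takes a 4-channel input and predicts one of 5 classes per pixel.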

Dataset

It is a synthetic dataset of plant images generated with Blender, as shown below:

The dataset is now available on Kaggle.

You can also check the public notebook I made for this dataset here.

1. Training & Evaluation

The model was trained on 10,000 images of size 224x224 (8,000 for training, 1,000 for validation and 1,000 for testing) on four 12 GB GPUs. The final accuracies were as follows:

Training accuracy = 99%

Validation accuracy = 98%
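The 8,000 / 1,000 / 1,000 split described above can be sketched as follows. This is only an illustration: it assumes the split is a random shuffle of image indices with a fixed seed, which the project does not state, and `split_indices` is a hypothetical helper.

```python
import random

def split_indices(n_total=10000, n_val=1000, n_test=1000, seed=42):
    """Shuffle image indices once and cut them into train / validation / test sets."""
    indices = list(range(n_total))
    random.Random(seed).shuffle(indices)  # fixed seed keeps the split reproducible
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]
    return train, val, test

train_ids, val_ids, test_ids = split_indices()
print(len(train_ids), len(val_ids), len(test_ids))  # 8000 1000 1000
```

Keeping the three index lists disjoint means the validation and test accuracies are measured on images the model never saw during training.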

The curves of accuracy and loss are shown below :

2. Inference

The model was tested on 1,000 RGB-D images and reached a test accuracy of 97.8%. Here are some results:
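For reference, an accuracy figure like this is commonly computed per pixel: the predicted class is the argmax over the class scores, and accuracy is the fraction of pixels where it matches the annotation. The sketch below assumes that definition (the metric is not specified here), and `pixel_accuracy` is a hypothetical helper.

```python
import numpy as np

def pixel_accuracy(probabilities, label_map):
    """Fraction of pixels whose argmax class equals the ground-truth label.

    probabilities: (H, W, num_classes) model output
    label_map:     (H, W) integer ground-truth classes
    """
    predicted = np.argmax(probabilities, axis=-1)
    return float(np.mean(predicted == label_map))

# Toy 2x2 image with 5 classes; the bottom-right pixel is misclassified on purpose.
labels = np.array([[0, 1],
                   [2, 4]])
probs = np.zeros((2, 2, 5))
probs[0, 0, 0] = 1.0
probs[0, 1, 1] = 1.0
probs[1, 0, 2] = 1.0
probs[1, 1, 3] = 1.0  # true class is 4

print(pixel_accuracy(probs, labels))  # 0.75
```

Note that pixel accuracy can be dominated by the background class, so per-class metrics such as intersection over union are often reported alongside it.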

3. Architecture

Here is a screenshot of one block of SegNet visualised in TensorBoard:
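In the SegNet paper, each encoder block ends with a max-pooling layer that remembers where each maximum came from, and the matching decoder block uses those positions to upsample. The NumPy sketch below illustrates that idea on a single 2D feature map. It is not taken from this project's code, whose blocks may instead use a plain upsampling layer, and the function names are hypothetical.

```python
import numpy as np

def max_pool_with_indices(x, size=2):
    """Max-pool a (H, W) map and record the argmax position inside each window."""
    h, w = x.shape
    # Rearrange into (H/size, W/size, size*size): one flattened window per output pixel.
    windows = x.reshape(h // size, size, w // size, size).transpose(0, 2, 1, 3)
    windows = windows.reshape(h // size, w // size, size * size)
    indices = windows.argmax(axis=-1)
    pooled = np.take_along_axis(windows, indices[..., None], axis=-1)[..., 0]
    return pooled, indices

def max_unpool(pooled, indices, size=2):
    """Place each pooled value back at its recorded position; all other pixels are zero."""
    ph, pw = pooled.shape
    windows = np.zeros((ph, pw, size * size), dtype=pooled.dtype)
    np.put_along_axis(windows, indices[..., None], pooled[..., None], axis=-1)
    windows = windows.reshape(ph, pw, size, size).transpose(0, 2, 1, 3)
    return windows.reshape(ph * size, pw * size)

feature_map = np.array([[1, 2, 0, 0],
                        [3, 4, 0, 5],
                        [0, 0, 6, 0],
                        [7, 0, 0, 0]], dtype=np.float32)

pooled, indices = max_pool_with_indices(feature_map)
restored = max_unpool(pooled, indices)
print(pooled)    # [[4. 5.] [7. 6.]]
print(restored)  # maxima back in their original positions, zeros elsewhere
```

The sparse map produced by unpooling is then densified by the convolution layers that follow in the decoder block.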

Conclusion

This project segments plant organs into 4 classes plus background using synthetic RGB-D images.

References

- SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation, by Vijay Badrinarayanan, Alex Kendall and Roberto Cipolla